
Data Science: The Central Limit Theorem Explained


Too Long; Didn't Read

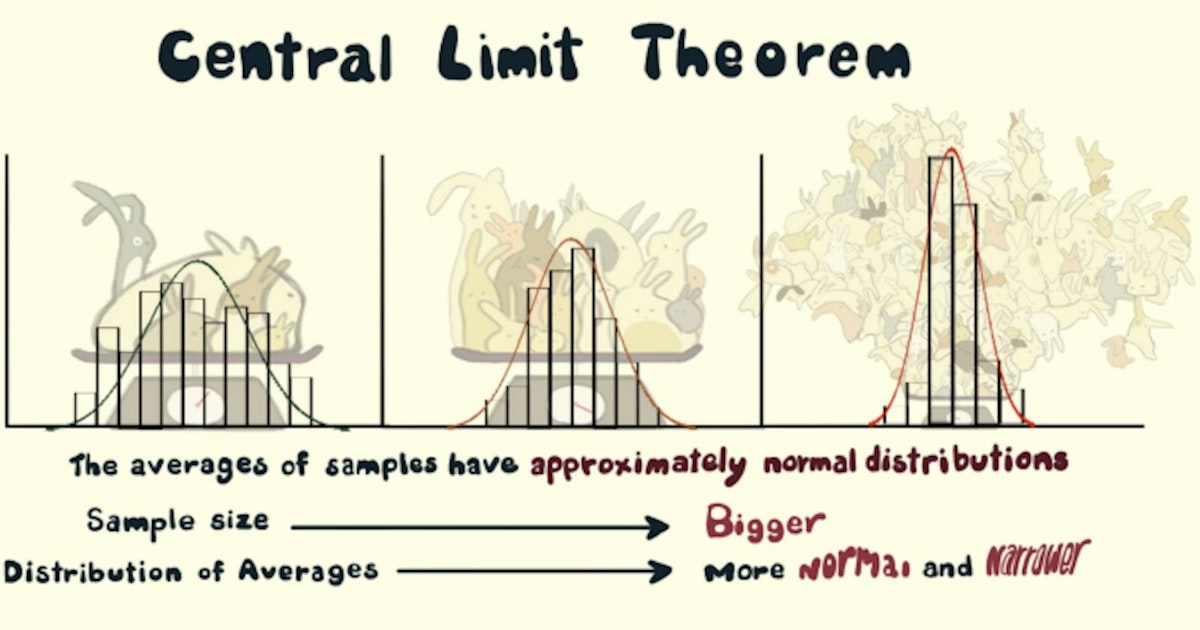

The Central Limit Theorem captures the following phenomenon. Take almost any distribution with a finite variance (say, the distribution of the number of passes in a football match). Draw a sample of n values from it (say n = 5) and repeat this m times (say m = 1000). Take the mean of each sample, which gives m = 1000 means. The distribution of those means is approximately normal: plot the means on the x-axis and their frequency on the y-axis and you get the famous bell curve. Increasing n shrinks the standard deviation of the means and brings their distribution closer to normal; increasing m gives a smoother, less noisy histogram of that distribution.
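The procedure above can be tried out with a short simulation. This is a minimal sketch using only the Python standard library, not a definitive implementation. The helper names (`sample_means`, `draw_exponential`, `text_histogram`) are made up for illustration, and the exponential source distribution is an assumption standing in for the football-passes example, chosen only because it is clearly skewed and not bell-shaped.

```python
import random
import statistics


def sample_means(draw, n, m, seed=0):
    """Return m means, each computed from n independent draws."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return [statistics.fmean(draw(rng) for _ in range(n)) for _ in range(m)]


def draw_exponential(rng):
    # Assumed source distribution: exponential with mean 1 and
    # standard deviation 1 (strongly skewed, far from normal).
    return rng.expovariate(1.0)


def text_histogram(values, bins=10, width=40):
    """Print a rough histogram: bin start on the left, frequency as bars."""
    lo, hi = min(values), max(values)
    counts = [0] * bins
    for v in values:
        idx = min(int((v - lo) / (hi - lo) * bins), bins - 1)
        counts[idx] += 1
    peak = max(counts)
    for i, c in enumerate(counts):
        left = lo + i * (hi - lo) / bins
        print(f"{left:6.2f} | {'#' * round(c * width / peak)}")


# n = 5 and m = 1000 follow the numbers used in the text; n = 50 is added
# to show the effect of a larger sample size.
means_n5 = sample_means(draw_exponential, n=5, m=1000)
means_n50 = sample_means(draw_exponential, n=50, m=1000)

for label, means in (("n = 5", means_n5), ("n = 50", means_n50)):
    print(label, "mean of means:", round(statistics.fmean(means), 3),
          "std of means:", round(statistics.stdev(means), 3))
    text_histogram(means)
```

If the source distribution has standard deviation σ, the means should have a standard deviation of roughly σ/√n, so the n = 50 histogram should come out noticeably narrower and more symmetric than the n = 5 one.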
